In August 2024, 39.4% of U.S. adults were using Generative AI. Among employed respondents, 28% were using it at work, and more than one in ten were using it every day.

If this sounds like a lot, it’s because it is. Generative AI is being adopted faster than both PCs and the internet, reaching 39.4% adoption in just two years, compared with 20% for those earlier technologies.

And it’s not just being used more — Generative AI is making people more productive, with gains of up to 25% in tasks like writing, coding, and data analysis across various industries.

Despite these advancements, the vast majority of human-machine interactions still occur in basic, general-purpose chatboxes. As this technology matures, we will see that these initial productivity gains are just the tip of the iceberg. The future lies in designing more intelligent AI systems that move beyond basic chatboxes — enhancing overall productivity by providing richer, more contextual experiences.

In the shorter term, the opportunity is clear: how can brands and AI toolmakers elevate this experience? How do you design tools that not only work seamlessly but also speak your brand’s language and meet user needs? Right now, your enterprise should be focusing on designing for Conversational AI: AI interfaces that deliver meaningful, user-centric interactions and help users complete the task at hand.

What is Conversational AI?

In a nutshell, Conversational AI means the creation of chatbots and other AI agents that we can interact with in a natural way. These tools understand speech, interpret text, and translate languages seamlessly through multimodal inputs to create more intuitive experiences. It’s AI speaking your language — or many other languages now for that matter.

This is important. When it comes to Generative AI, the real breakthrough wasn’t just the intelligence — it was giving intelligence a user interface. Tools like ChatGPT brought AI into the hands of millions by making it accessible. Without this intuitive interaction, the full potential of Generative AI might not have been realised.

This puts Conversational AI design at the heart of the AI conversation. While Large Language Models (LLMs) can understand complex queries, tap into knowledge bases, and generate smart, justified recommendations, the real opportunity now is taking these systems further: designing them to feel human, reflect your brand’s voice, and deliver genuine value in every domain, which in turn drives adoption and boosts productivity.

The Role of Language in Conversational AI User Experience (UX)

In Conversational AI, words are a core part of the user experience (UX). The tone, style, and clarity of an AI’s language can either elevate or undermine the entire interaction.

Think of language as an interface. Every word is a touchpoint. Whether external (customer-facing) or internal (employee-centric), the language should adapt to the audience, remaining clear, concise, and human.

This is where NLU-first design steps in. NLU-first (Natural Language Understanding) design focuses not just on recognising intents but on understanding the user’s overarching meaning and goals. Instead of following fixed scripts, NLU-first AI systems interpret and respond based on a deeper understanding of the context behind the user’s intent.

With NLU-first systems, AI can better handle unpredictable or unsupported paths that users might take — the long tail of interactions — by understanding more than just the literal meaning of the words. This deeper understanding helps create more human-like and intuitive interactions, and ensures that the language used by AI enhances the overall user experience.

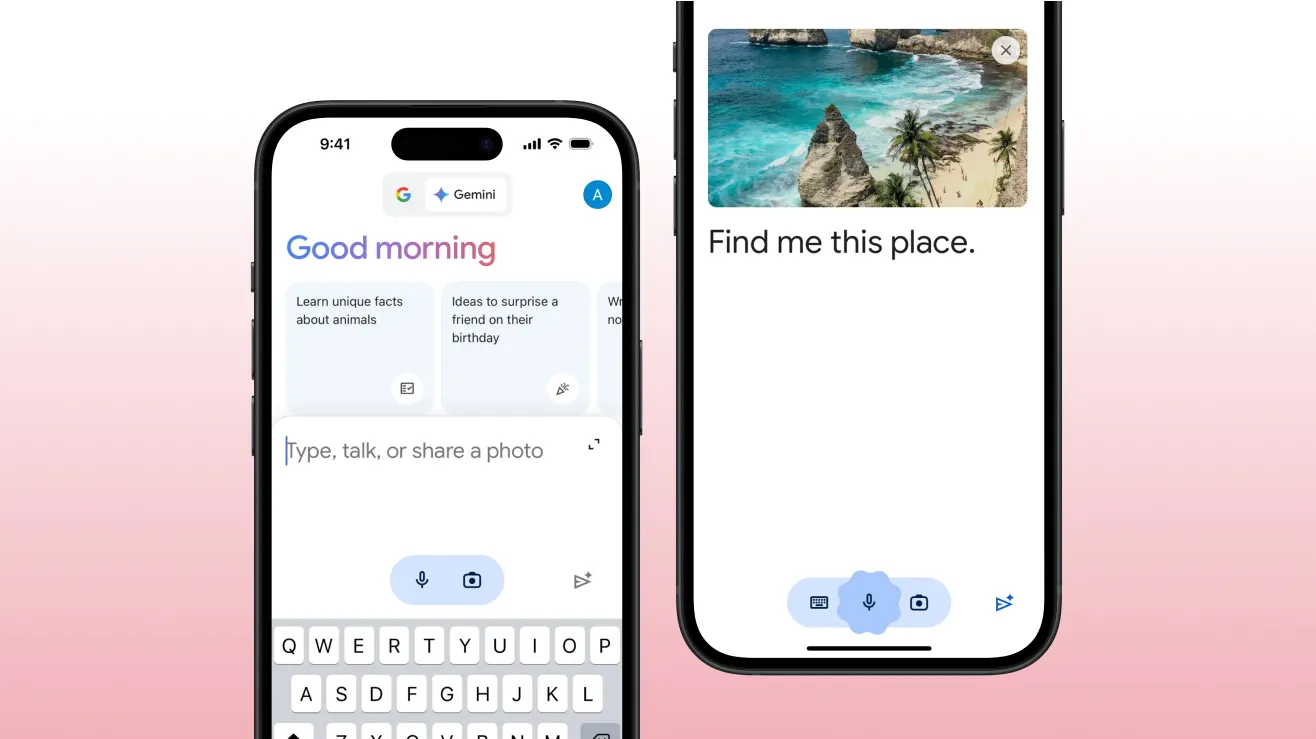

Designing for Multimodal Interactions in Conversational AI

As multimodal AI systems become more entrenched in day-to-day and enterprise operations, how we design for interacting with these systems will evolve as well.

In most examples of Conversational AI, intention (the act of communicating a specific goal or need) has typically been expressed through voice or text. But as multimodal interactions become more common, intention will be conveyed through a wider range of inputs, such as gestures and facial expressions. This evolution means that AI systems will need to recognise and interpret not just what users say, but also how they behave.

For businesses and designers, this presents a major opportunity. Done right, multimodal design expands the ways users interact with AI systems, creating more seamless experiences that respond to a broader range of cues and enhance efficiency.

However, designing for multimodal interactions comes with its own challenges. One such issue is the presence of spurious correlations in AI systems. These occur when multimodal AI models, like OpenAI’s CLIP, mistakenly associate unrelated elements in the data, such as assuming that a pacifier must always appear with a baby. These faulty associations can result in AI systems drawing the wrong conclusions about user behaviour or input, particularly when multiple input modalities are involved.

On top of this, even at the cutting edge, old-school problems remain: as AI systems become more capable of handling complex inputs, the challenge of latency will become even more pronounced. Most users expect immediate feedback, and any delay in response can disrupt the flow of interaction and hinder adoption. Fortunately, even here there are opportunities to design well for Conversational AI.

Leveraging Latency to Simulate AI Thinking and Build Trust

Research has found that users often interpret brief pauses in dialogue as the AI “thinking.” This anthropomorphising creates an illusion of human-like interaction, building trust and engagement.

Brief pauses also give users time to process the interaction, creating a sense of anticipation and making the eventual response more satisfying. In fact, studies have long demonstrated that slight delays mimic human conversational norms, making users feel that the AI is carefully considering their input.

This creates a design opportunity. By embracing the perception of AI “thinking,” designers can make interactions feel more thoughtful and less transactional. As shown with OpenAI’s o1, displaying a summary of the model’s chain of thought not only fills that brief wait time but also turns latency into part of the experience.

Visual or textual cues — like a simple “Let me find that for you…” — can maintain the illusion of fluid conversation while the system retrieves information. Done right, latency isn’t a weakness; it’s a feature that enhances user trust and keeps the dialogue natural.
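As a rough illustration of the cue pattern above, here is a minimal Python sketch. It assumes an asynchronous chat backend; the function names (`respond_with_cue`, `lookup`, `send`) and the 0.8-second threshold are hypothetical choices for this example, not taken from any particular product. The idea is simply to show the cue only when retrieval is slow enough for the user to notice.

```python
import asyncio

CUE = "Let me find that for you…"

async def respond_with_cue(lookup, send, cue_after=0.8, cue=CUE):
    """Await `lookup`; if it is still running after `cue_after` seconds, send a cue first."""
    task = asyncio.create_task(lookup())
    # Wait up to `cue_after` seconds; `done` stays empty if the lookup is still running.
    done, _pending = await asyncio.wait({task}, timeout=cue_after)
    if not done:
        await send(cue)
    answer = await task
    await send(answer)
    return answer

def demo(delay, cue_after=0.05):
    """Simulate a lookup that takes `delay` seconds and collect what the user would see."""
    messages = []

    async def lookup():  # hypothetical stand-in for a retrieval or model call
        await asyncio.sleep(delay)
        return "Your order ships on Friday."

    async def send(text):  # hypothetical stand-in for pushing a message to the chat UI
        messages.append(text)

    asyncio.run(respond_with_cue(lookup, send, cue_after=cue_after))
    return messages
```

With this shape, a fast lookup produces only the answer, while a slow one produces the cue followed by the answer, so the user never sits in front of a silent interface.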

But while we can design for these subtle, subjective experiences, the real challenge lies in addressing objective errors in something as unpredictable as AI. In systems that are non-deterministic by nature, how do we ensure accuracy, consistency, and reliability in responses?

Certain guardrails such as having an LLM-as-a-Judge prevent chatbots from venturing outside predetermined topics.

Case Studies: How DoorDash & Klarna Leverage Conversational AI

At the food-delivery company DoorDash, the AI-driven support system for Dashers (the independent contractors who pick up orders from merchants and deliver them to customers) combines a Retrieval-Augmented Generation (RAG) system with real-time validation to ensure responses are accurate and relevant. The RAG system retrieves the most pertinent information from a vast knowledge base and generates responses that directly address a Dasher’s specific inquiry.

To ensure these responses are both accurate and on-brand, DoorDash uses a system called LLM-as-a-Judge. This system evaluates the chatbot’s output in real time, verifying the correctness of the information and the coherence of the conversation, and ensuring that no off-topic or misleading replies slip through. The LLM Judge acts as a second layer of review, catching potential hallucinations and improving the quality of responses. If the AI-generated response doesn’t meet the required standards, it’s flagged, corrected, or escalated to human support.
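The retrieve, generate, judge, and escalate flow described above can be sketched in a few lines of Python. This is not DoorDash’s implementation: the knowledge base, the word-overlap retrieval, and the `generate` and `judge` functions are all hypothetical stand-ins for a real document index and real LLM calls. The sketch is only meant to show where the judge sits in the pipeline.

```python
from dataclasses import dataclass

# Hypothetical knowledge base; a real system would query a document index.
KNOWLEDGE_BASE = [
    "Dashers are paid weekly by direct deposit.",
    "To report a missing item, open the order and tap Help.",
]

def tokens(text):
    """Lower-case words with surrounding punctuation stripped."""
    return {word.strip(".,?!").lower() for word in text.split()}

def retrieve(question, k=1):
    """Rank articles by words shared with the question (stand-in for vector search)."""
    q = tokens(question)
    ranked = sorted(KNOWLEDGE_BASE, key=lambda doc: len(q & tokens(doc)), reverse=True)
    return [doc for doc in ranked[:k] if q & tokens(doc)]

def generate(question, context):
    """Stand-in for the LLM call that drafts an answer from the retrieved context."""
    return context[0] if context else "I think the answer is probably yes."

def judge(draft, context):
    """Stand-in for the LLM judge: pass only drafts that are grounded in the context."""
    return any(draft in doc for doc in context)

@dataclass
class Reply:
    text: str
    escalated: bool

def answer(question):
    context = retrieve(question)
    draft = generate(question, context)
    if judge(draft, context):
        return Reply(draft, escalated=False)
    # The draft failed review, so hand the conversation to a person instead.
    return Reply("Connecting you with a support agent.", escalated=True)
```

The design point is that the draft never reaches the user directly: it either passes the second layer of review or the conversation is escalated.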

Similarly, Klarna’s AI system places heavy emphasis on clear, functional language, with certain guardrails — likely another LLM Judge — preventing the chatbot from venturing outside predetermined topics, ensuring it stays focused on delivering useful, brand-aligned answers. When faced with complex or off-script queries, the system promptly hands off to a human agent, bringing a human into the loop and ensuring that the language and the user experience remain seamless.

The Future of Conversational AI: Memory and Personalisation

Traditionally, AI systems have engaged with users in isolated sessions, starting fresh with every conversation. However, even here, we are beginning to see breakthroughs in how AI agents use long-term memory to remember past interactions and learn continuously.

Proxy AI, from London-based startup Convergence, applies memory to automate workflows for employees and assist consumers with everyday tasks. This ability to retain and apply information across sessions brings Conversational AI closer to human-like interaction, where remembering preferences and past exchanges leads to more personalised experiences. OpenAI is also integrating memory into its systems, rolling out Advanced Voice and enabling AI to remember instructions across multiple interactions.

What is clear, then, is that memory is fast becoming one of the main pillars of Conversational AI. From personal assistants that adapt to your habits to business tools that anticipate needs, memory will push Conversational AI even further beyond the transactional, helping businesses and individuals alike to work smarter and more efficiently.
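To make the idea of cross-session memory concrete, here is a minimal Python sketch. It assumes memory is nothing more than per-user facts saved to a JSON file. The `SessionMemory` class and `greet` function are hypothetical and do not reflect how Proxy or OpenAI store memory; production systems typically add retrieval, consent, and expiry on top.

```python
import json
from pathlib import Path

class SessionMemory:
    """Persist per-user facts to a JSON file so a later session can reload them."""

    def __init__(self, path):
        self.path = Path(path)
        # Load whatever an earlier session saved, or start empty.
        self.facts = json.loads(self.path.read_text()) if self.path.exists() else {}

    def remember(self, user_id, key, value):
        self.facts.setdefault(user_id, {})[key] = value
        self.path.write_text(json.dumps(self.facts))

    def recall(self, user_id, key, default=None):
        return self.facts.get(user_id, {}).get(key, default)

def greet(memory, user_id):
    """Personalise the opening line when the user's name is already known."""
    name = memory.recall(user_id, "name")
    return f"Welcome back, {name}." if name else "Hello! What should I call you?"
```

Creating a second `SessionMemory` on the same file stands in for a new conversation: anything remembered earlier is available again, which is what lets the greeting change from a generic one to a personal one.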

If you’re looking to design, build and deploy your own Conversational AI product, remember this: designing for Conversational AI is about creating an experience that feels natural, intuitive, and reliable.

Whether it’s incorporating latency to simulate thinking, adding memory for more personalised interactions, or using robust retrieval systems like RAG for accuracy, the future of AI lies in intelligent interfaces that replicate the nuances of human conversation and deliver real value.
